Caches are a source of unpredictability since it is very difficult to predict if a memory access results in a cache hit or miss. In systems running multiple tasks steered by a preemptive scheduler, it is even impossible to determine the cache behavior since interrupt-driven schedulers lead to unknown points of time for context switches. Furthermore, it is even unknown at which address the execution of a preempted task continues, hence it is unknown which cache line is evicted next. An unknown cache behavior forces a static WCET analyzer to conservatively assume a cache miss for every memory access, thus implying a highly overestimated system's WCET.

Cache partitioning is a technique to make the I-cache behavior more predictable. Each task of a system is assigned to a unique cache partition. The tasks in such a system can only evict cache lines residing in the partition they are assigned to. As a consequence, multiple tasks do not interfere with each other any longer w.r.t. the cache during context switches. This allows to apply static WCET analyses for each individual task of the system in isolation. The overall WCET of a multi-task system using partitioned caches is then composed of the WCETs of the single tasks given a certain partition size, plus the overhead required for scheduling including additional time for context switches.

Cache partitioning can be realized in software without any modification of a cache's hardware. For this purpose, the code of each task has to be scattered over the address space such that tasks are solely mapped to only those cache lines belonging to the task. Thus, a task's executable code is split into many chunks which are stored in non-consecutive regions in main memory. To make sure that a task's control flow remains correct after splitting it into chunks, additional jump instructions between these chunks must be inserted into the code. To generate non-contiguous chunks of a task's code, the linker needs to be provided with dedicated relocation information for each task. Since WCC automatically generates linker scripts, software-based cache partitioning is easy to realize in this compiler.

The remaining challenge consists of determining a partition size per task such that a multi-task system's overall WCET is minimized. In the following, preemptive round robin scheduling of tasks is assumed and the period pi of each task ti ∈ {t1, ..., tm} is known a priori. The length of the entire system's hyper-period is equal to the least common multiple of all tasks' periods pi. The schedule count ci then reflects the number of times, each task ti is executed within a single hyper-period. Furthermore, possible cache partition sizes sj measured in bytes are given: sj ∈ {s1, ..., sn}.

WCET-aware software based cache partitioning is modeled within WCC using integer linear programming (ILP). A binary decision variable xi,j is set to 1 if and only if task ti is assigned to a partition of size sj. To ensure that a task is assigned to exactly one partition, a constraint of the following shape is added for each task ti:

xi,1 + xi,2 + ... + xi,m = 1

Another constraint in the ILP ensures that the amount of used cache partitions does not exceed the I-cache's total capacity S:

x1,1 * s1 + x1,2 * s1 + ... + x2,1 * s2 + x2,2 * s2 + ... ≤ S

It is assumed here that the WCET WCETi,j of each task ti if executed once using a cache partition of possible size sj is given. This is achieved by performing a WCET analysis of each task for each partition size before generating the ILP for software based cache partitioning. A task ti's WCET WCETi depending on the partition size used by the task can thus be expressed as:

WCETi = xi,1 * WCETi,1 + xi,2 * WCETi,2 + ... + xi,m * WCETi,m

The objective function of the ILP models the WCET of the entire task set for one hyper-period. This overall WCET is defined as:

WCET = c1 * WCET1 + c2 * WCET2 + ... + cm * WCETm

The ILP finally only has to minimize this variable:

WCET: minimize

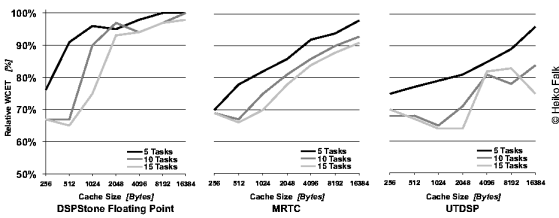

The above figure shows the WCETs for WCET-aware cache partitioning and three different benchmark suites. Since no multi-task benchmark suites currently exist, randomly selected task sets from single-task benchmark suites were used. The figure shows results for task sets consisting of 5, 10 and 15 tasks, respectively. Each individual point in the figure's curves denotes the average value over 100 randomly selected task sets of a certain size. Benchmarking was done for I-cache sizes ranging from 256 bytes up to 16 kB. All results are given as a percentage, with 100% corresponding to the WCETs achieved by a standard heuristic that uses a partition size per task which depends on the task's code size relative to the code size of the entire task set. All results are generated using WCC's highest optimization level -O3.

As can be seen, substantial WCET reductions of up to 36% were obtained. In general, WCET savings are higher for small caches and lower for larger caches. For DSPstone, WCET reductions between 4% and 33% were achieved. For the MRTC benchmarks, an almost linear correlation between WCET reductions and cache sizes was observed, with maximal WCET savings of 34%. For the large UTDSP benchmarks, WCET reductions of up to 36% were finally observed. In most cases, larger task sets exhibit a higher optimization potential so that WCC's cache partitioning achieves higher WCET improvements as compared to smaller task sets.

WCET-aware Software Based Cache Partitioning for Multi-Task Real-Time Systems