Modern caches can be controlled by software. The software can load parts of its code or of its data into the cache and lock the cache afterwards. Cache locking prevents the cache's contents from being flushed by deactivating the replacement. A locked cache is highly predictable and leads to very precise WCET estimates, because the uncertainty caused by the replacement strategy is eliminated completely. This page presents techniques exploring the lockdown of instruction caches at compile-time to minimize WCETs.

For real-time systems with hard timing constraints, many cache architectures are problematic due to their unpredictability. Since they are hardware controlled, it is virtually impossible in many cases to determine the latency of a memory access. Thus, WCET estimates may be heavily overestimated in the presence of caches. Modern caches allow to lock their contents, i.e. to protect it from being flushed by disabling the replacement. This way, it is possible to predict access times of data or instructions that have been locked in the cache, and to make precise statements about the cache's worst-case timing.

This page presents techniques for compile-time decided I-cache lockdown to minimize WCETs. A set of functions of a program is determined that is locked into the cache at system startup time. During the whole execution time of the program exploiting cache locking, the locked cache's contents remains invariant ("static I-cache locking"). Cache contents selection is done such that the set of selected functions leads to the highest WCET reductions, under simultaneous consideration of switching worst-case execution paths (WCEPs).

The entire approach for WCET-aware static I-cache locking works in the following three stages:

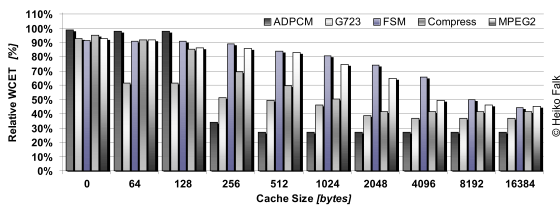

The above figure depicts the effect of static WCET-aware I-cache lockdown on the resulting WCETs. It shows the WCETs of the benchmarks after I-cache locking as a percentage of the WCETs without cache locking. The 100% base line thus reflects the WCETs of the benchmarks for a system with I-cache in normal operation mode.

As can be seen from this figure, the proposed techniques achieve significant WCET reductions for all cache sizes. Already for a very small I-cache of 64 bytes, lockdown leads to improvements between 2% (ADPCM) and 38.3% (G723). The large WCET reduction for G723 is caused by locking two very small but WCET-critical functions for absolute value computation and quantization.

With increasing I-cache sizes, even higher WCET reductions were achieved since more functions were locked in the I-cache, thus leading to a proportionally higher predictability of instruction fetches. Per benchmark, a monotonic decrease of WCETs was observed with increasing I-cache sizes. For some benchmarks (G723, MPEG2), smoothly decreasing WCET curves were obtained. This smooth decrease shows that many small functions lie on the WCEP of these benchmarks. By increasing the I-cache size by only a few bytes, these benchmarks benefit since the additional cache space is used for lockdown of functions on the WCEP.

In contrast, ADPCM exhibits a stepwise WCET decrease with increasing cache sizes. Here, the steep WCET reduction down to 34% when increasing the cache size from 128 bytes to 256 bytes stems from the lockdown of a system library function for integer division. This system function is heavily used within ADPCM during sine and cosine computations. When increasing the I-cache size further to 512 bytes, another reduction of WCET down to 27% was observed. Hereafter, a saturation is reached so that caches larger than 512 bytes do not lead to more reductions of the WCET of this benchmark.

The overall largest WCET reductions were achieved for an I-cache size of 16 kB which is the size of the ARM920T's native configuration. For this scenario, WCET reductions between 54.6% (MPEG2) and 73.1% (ADPCM) were measured. On average over all five benchmarks, a WCET reduction of 60.1% was achieved for a 16 kB I-cache.