Loop-Invarian Code Motion (LICM) is a well-known ACET optimization. It recognizes computations within a loop that produce the same result each time the loop is executed. These computations are called loop-invariant code and can be moved outside the loop body without changing the program semantics. The positive effects of LICM are:

Besides these positive effects on the code, LICM may also degrade performance. This is mainly due to two reasons. First, the newly created variables to store the loop-invariant results outside the loop, may increase the register pressure in the loops since their live ranges span across the entire loop nest. As a result, possibly additional spill code is generated. Second, LICM might lengthen other paths of the control flow graph. This situation can be observed if the invariants are moved from a less executed to a more frequently executed path, e.g., moving instructions above a loop's zero-trip test.

Issues like the impact of LICM on the register pressure emphasize the dilemma compiler writers are faced with during the development of good heuristics. Performing loop-invariant code motion has conflicting goals, and it can not be easily predicted if this transformation is beneficial.

A review of standard compiler literature revealed that LICM heuristics are unknown or at least not published. Also, there is no related work concerning this optimization except standard algorithms describing how the optimization can be realized in practice. On the software side, a similar situation can be observed. Most compilers do not model the complex interactions between different parts of the code and the loop invariants, but perform LICM whenever invariants are found without using any heuristics that might avoid the adverse effects. To tackle this problem, we automatically generate WCET-aware heuristics for WCC's assembly level LICM using machine learning techniques.

In contrast to WCC's machine learning based Function Inlining, the selection of a machine learning algorithm is done in a systematic way. This process is called automatic model selection. WCC's automatically evaluates six popular learning algorithms, such as random forests or support vector machines (SVM), to find that algorithm that generates a heuristic leading to the highest WCET reduction.

Besides the choice of a learning algorithm, the choice of the learners' parameters are crucial. Exhaustively searching over all combinations of user-defineable classifier parameters is not feasible. We therefore apply an evolutionary strategy. Each individual in a population represents a combination of parameter values of the algorithm under analysis. In the beginning, the parameters of each individual are initialized randomly. To create a new generation, a fraction of the individuals repeatedly takes part in a tournament selection which chooses individuals with highest performance (i.e., highest WCET reduction) to be possibly processed by the crossover and mutation operator.

WCC extracts numerous static features from the assembly code to be optimized. These features are exploited by supervised learning to induce a machine learning model serving as LICM heuristic. The following features are automatically extracted:

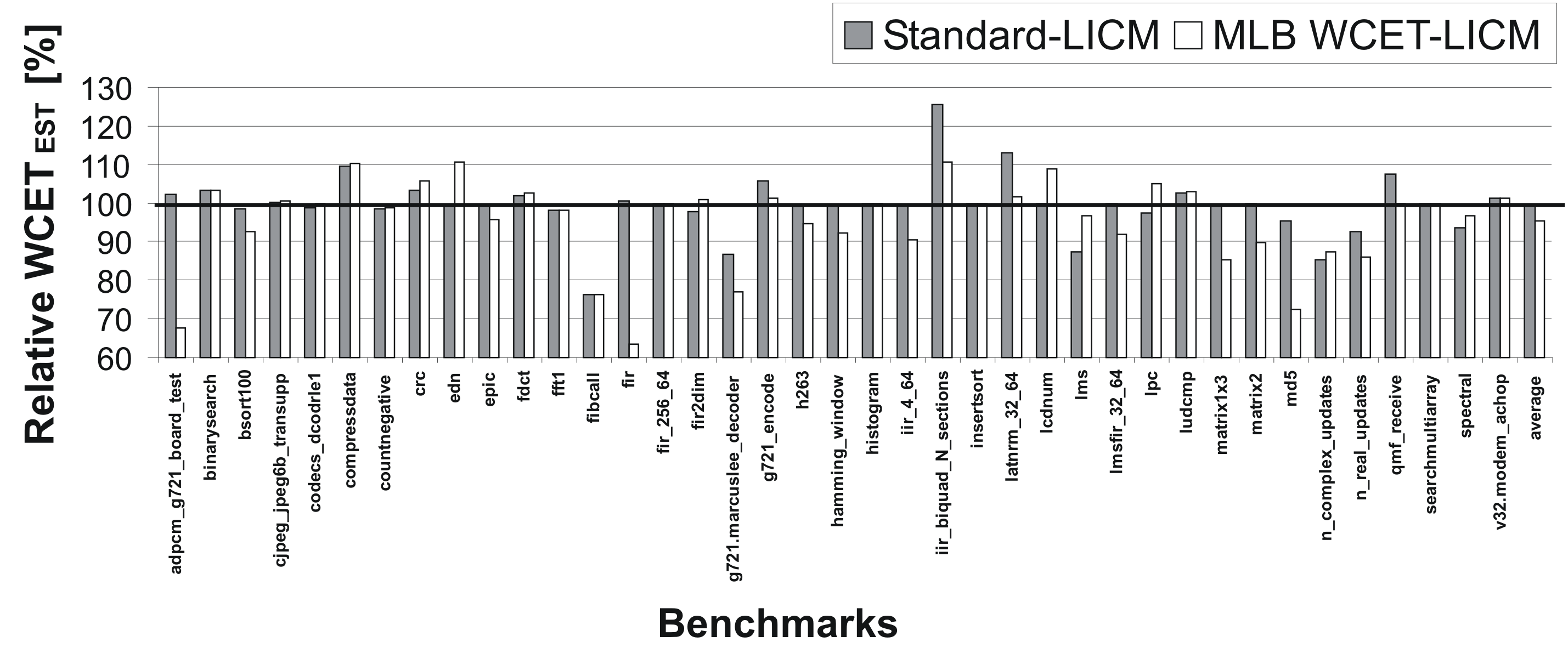

In order to evaluate the effectiveness of our machine learning based LICM heuristic, we measured the impact of our MLB heuristics for LICM on the WCET estimates of 39 representative benchmarks. The above figure shows a comparison between the standard ACET LICM (Standard-LICM) and our optimization (MLB WCET-LICM) using the best heuristic generated by the Support Vector Machine learner. The reference mark of 100% corresponds to the WCET estimates for O3 with disabled LICM. Due to the challenges for the manual generation of an appropriate heuristic, the standard approach for LICM in many compilers is the application of the code transformation whenever possible. The light bars representing the MLB-LICM show WCET estimates computed during the benchmark-wise cross validation. By learning a model and validating it on the excluded benchmark, the light bars indicate how good the heuristic performs on unseen data.

As can be seen in the figure, in most cases the new MLB-LICM outperforms the standard LICM optimization, with up to 36.98% for the fir benchmark from the MRTC WCET Benchmark Suite. On average, the standard LICM achieves a WCET reduction of merely 0.56%, while our MLB-LICM reduces the WCET by 4.64%.

Automatic Selection of Machine Learning Models for WCET-aware Compiler Heuristic Generation